Educational Videos

Everything you need to know about crafting effective video tutorials and online lessons. Learn how to keep your viewers engaged and focused on the subject.

How to Turn Blog Posts into Video Tutorials Using AI

Everything you need to know about turning your blog posts into videos with AI: adapting posts into scripts, generating voiceovers, and editing it all into a polished video.

Best AI Voice Changer Tools for Every Scenario – Gaming, Dubbing and More

With the right tool, the possibilities for changing your voice are endless! Explore the best AI voice changers of 2025, perfect for creators, gamers, and anyone ready to transform their voice.

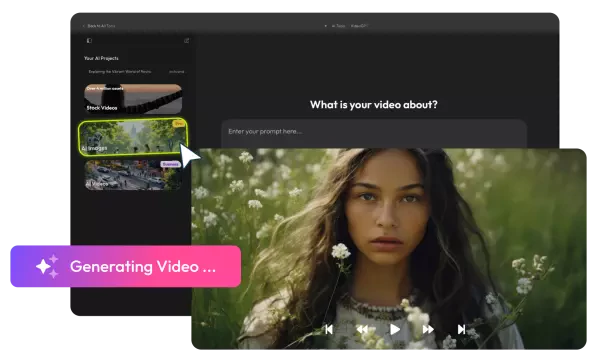

12 Best Image-to-Video AI Tools in 2025

Looking to turn your static photos into dynamic, scroll-stopping videos? This one is for you. No video editing skills needed: just your creativity and a few clicks.

13 Best AI Copywriting Tools for Blogs, Ads, E-Commerce & More (2025)

Think AI can’t write with flair? Explore the 13 best AI copywriting tools for boosting your creativity and fast-tracking any content project, and you might change your mind.

How to Make an AI Video From a Photo (Step-by-Step Guide)

Learn how to turn your photos into an AI-powered video. Add motion, sync sound, and export a high-quality MP4 with just a few clicks, right in your browser.

Best AI Tools for Small Business in 2025 – Boost Productivity, Marketing and Growth

To be clear, you can run a successful small business without AI, but only if you're willing to accept significant competitive disadvantages. Now, thanks to these accessible AI tools for entrepreneurs, you can compete at a level previously reserved for businesses with much deeper pockets. The democratization of AI has perhaps done more to level the playing field for small businesses than any technological advancement in the past 30 years.

ChatGPT Prompts for Photo Editing

Want to edit photos with ChatGPT? Get a list of effective prompts to change backgrounds, remove objects, and apply artistic styles without unwanted changes.

Full Guide on Creating an AI Influencer

Step into the future of marketing by learning how to create your own AI influencer from scratch, using cutting-edge AI tools and clever strategies.

15 AI Prompts to Upgrade Your Resume

Land your dream job with AI-powered resume prompts! Craft a standout resume, optimize it for applicant tracking systems (ATS), and impress human recruiters, all while keeping your personal touch.

13 AI Tools for All Graphic Design Needs

We rounded up the 13 best AI tools for graphic designers to speed up their workflow, boost creativity, and bring ideas to life more easily in 2025.