Deepfake Technology – What It Is, How It Works, Where It Can Be Used

Updated on July 24, 2025

Table of contents

- What is deepfake technology?

- How do deepfakes work?

- Is a deepfake the same as a Photoshopped image?

- Common techniques and types of deepfakes

- Top ways in which deepfakes are used, and famous deepfake examples

- Is creating deepfakes illegal?

- How can you recognize a deepfake?

- Protection against deepfakes

- Pandora’s box has been opened, but there’s still a glimmer of hope

Imagine you’re scrolling through social media when you come across a video of a world leader declaring war, or your favorite celebrity doing something shocking and out of character. Even worse, what if it’s a video of yourself doing – or saying – something that you have zero recollection of? The video might look and sound real at a glance, but it is, in fact, entirely fake.

That’s the power of deepfakes and the reason why so many security experts have been warning us about deepfake attacks over the past five years. In fact, if you’ve spent any amount of time online, you probably don’t even have to imagine. With deepfakes becoming increasingly popular each year, chances are you’ve seen one already, even if it was just a harmless (and clearly labeled) celebrity AI video meant solely for entertainment.

In this article, we’ll try to clear up the confusion surrounding deepfakes. We’ll explain what they are and how they work, explore the ways in which they can be used, and cover what you can do to protect yourself against them.

What is deepfake technology?

Simply put, deepfake technology is a type of artificial intelligence used to create realistic fake images, videos, and audio clips of real or non-existent people.

Most commonly, the word ‘deepfake’ is used to describe AI-generated videos portraying public figures like celebrities or politicians doing or saying something that they did not actually do.

Remember that infamous AI video of Will Smith eating spaghetti?

That’s a deepfake, albeit a primitive one. The subject matter was harmless, and the video itself was too glitchy to be taken seriously, but as AI technology advances rapidly, deepfake videos are becoming a lot more convincing.

This AI-generated video was created only two years after the original one – notice the massive jump in realism between the two clips.

How do deepfakes work?

The name “deepfake” combines “deep learning” and “fake”. Deep learning is a type of machine learning, the same approach that modern AI is built on. However, deepfakes predate modern AI tools like ChatGPT or DeepSeek by quite a few years, and they have much more in common with things like Snapchat filters or the facial recognition software used by your phone.

I’m not going to bore you with a technical explanation of machine learning, neural networks, and image generation. The truth is, you don’t need an in-depth understanding of any of those topics to create deepfakes, just like you don’t need to be a mechanic to drive a car.

Creating deepfakes is as easy as finding one of the many free tools available online, uploading a picture, video, or audio clip, and clicking Generate, which is exactly what makes them so scary.

Is a deepfake the same as a Photoshopped image?

It’s easy to look at deepfake images and think that this is just more of the same old Photoshop fakes we’ve had for the past 20 years, but the truth is that there are quite a few differences between the two:

- First off, when they’re done right, AI-generated deepfakes can look a lot more realistic than most photoshops. The technology is also rapidly evolving, and telltale signs of AI like the wrong number of fingers or too many teeth are no longer as prevalent, making it harder and harder to spot deepfake images.

- Deepfake technology is A LOT more accessible than Photoshop. While creating convincing Photoshops takes lots of skill and years of practice, creating a convincing deepfake can often be as simple as downloading an app or typing in a prompt. That means that anyone with a few minutes to spare can easily create one using their computer or, sometimes, even their smartphone.

- Lastly, there is also the issue of deepfake videos. While deepfake images are a lot easier to create than convincing Photoshops, this gap in accessibility grows even bigger when we talk about videos. You can generate deepfake videos in minutes or hours that would have taken days or weeks of work using traditional computer-generated effects and editing methods.

Common techniques and types of deepfakes

There are as many types of deepfakes as there are types of media, but broadly speaking, deepfakes can be split into “visual deepfakes” (images and videos) and “audio deepfakes” (fake voice calls, AI-generated songs using someone’s voice, and other recordings).

If you want to get further into it, you can also categorize deepfakes as:

- Face-swapping deepfakes – Deepfakes where someone’s face is superimposed on a different video or image.

- Vocal deepfakes – Creating a fake audio recording or a song using someone else’s voice. This can also include real-time voice manipulation during a call.

- Body movement – Faking someone’s gestures or body movements in a video.

- Text deepfakes – Using AI to reproduce someone’s writing style and make it appear like a certain piece was written by them.

- Object manipulation – Placing an object in a video that was not there originally.

- Hybrid deepfakes – Combining two or more of the techniques we outlined above to create a faked video.

Ultimately, however, probably the most important distinction between deepfakes is whether they are malicious or benign. Malicious deepfakes are intended to cause harm, either by defaming or spreading misinformation. Benign deepfakes can be harmless or even beneficial.

Top ways in which deepfakes are used, and famous deepfake examples

The word ‘deepfake’ has an inherently negative connotation, and if we look at the history of deepfakes, it’s easy to see why.

Non-consensual pornography

The term ‘deepfake’ was coined by Reddit user u/deepfakes in late 2017. The subreddit he created was dedicated to swapping female celebrities’ faces into pornographic material. Two years later, a report by cyber-security company Deeptrace showed that 96% of deepfake videos found online were pornographic and featured women. The trend continued in 2023, with 98% of deepfake videos available online being pornographic, according to a report by SecurityHero.

Political Propaganda and Influence Campaigns

And non-consensual pornography is not the only way in which deepfakes are being used maliciously. Deepfake technology is often also used for propaganda, defamation, spreading misinformation, and just straight-up scamming people (or companies!).

In 2022, a deepfake video of Ukrainian president Volodymyr Zelenskyy telling his soldiers to lay down their arms and surrender the fight against Russia made the rounds on social media. Hackers even uploaded it to a prominent Ukrainian news website to give more credibility to the fake.

https://www.youtube.com/watch?v=X17yrEV5sl4

Fake images farming likes on social media

Recently, we have also seen a growing trend of seemingly innocuous Facebook pages and TikTok accounts targeting primarily elderly demographics using AI-generated content and paid bots to boost their reach and amass large audiences of real people.

Once the audiences grow large enough, the pages are sold off, and the new owners either attempt to redirect their audience to external websites or pivot to posting political propaganda during elections or times of political unrest.

The most common types of fake content spread through these pages are feel-good stories and faked photos of children standing next to sculptures they supposedly made themselves, usually out of food items such as fruits and vegetables. There’s also usually some religious element involved, which is what led to the infamous Shrimp Jesus trend of 2024, where multiple bot pages started posting AI-generated photos of Jesus made from shrimp in an attempt to maximize engagement.

Scams

Deepfake technology is also often used for scams, targeting either mass internet audiences or specific high-profile users.

Deepfaked videos of celebrities endorsing crypto scams, fake investment funds, or scam products are often used in ads across Facebook, TikTok, and Instagram. The videos usually target elderly or non-tech-savvy audiences and focus specifically on non-English speaking countries to fly under the radar of the platforms’ moderation teams.

Video caption: An example of a deepfake video using Taylor Swift’s image to promote a fake giveaway. The video directs viewers to a phishing website that would steal their personal information and charge their credit card.

While most of these videos are eventually taken down after enough user reports, they still reach real viewers first. The fact that these attacks keep happening suggests that deepfake video scams turn a profit, even if the videos are only up for a limited time.

It’s also worth noting that a lot of the time, deepfake videos and images can be used in combination with other cyberattacks in order to increase their exposure and effectiveness.

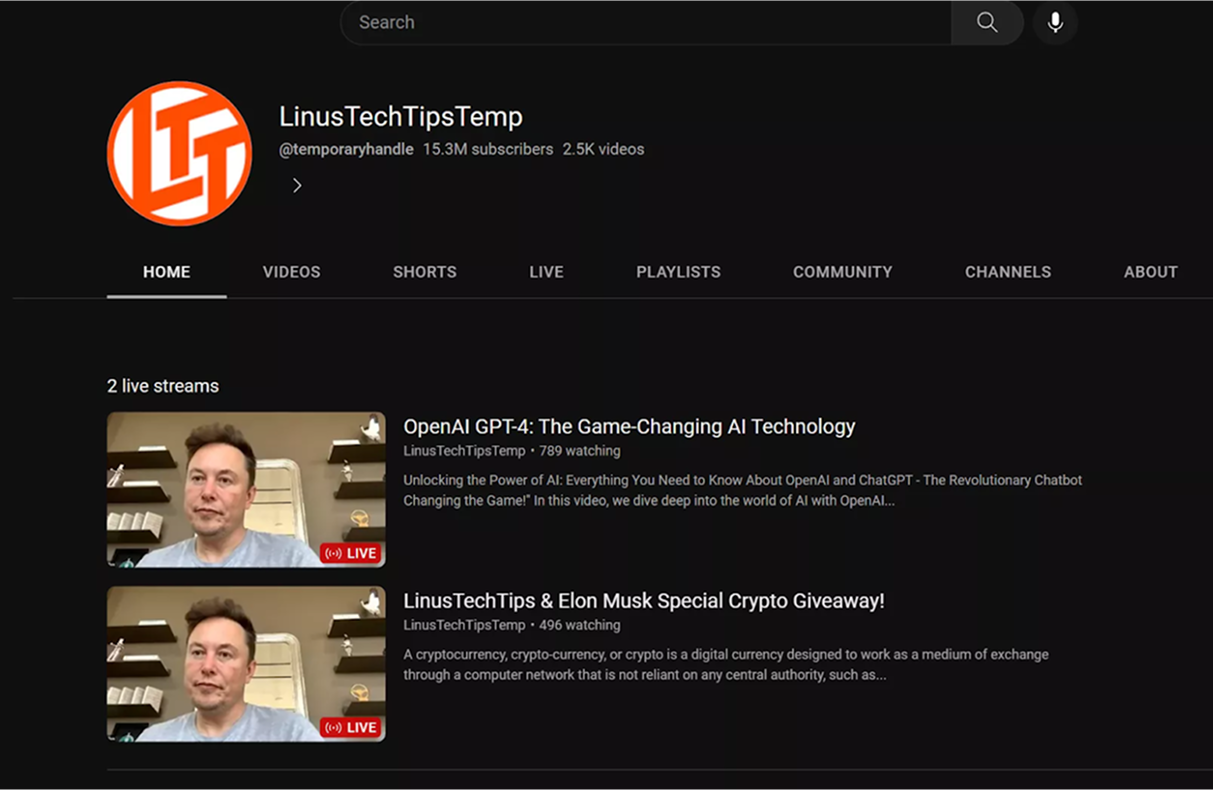

In 2023, multiple YouTube channels, including Linus Tech Tips, were hijacked by hackers. After gaining access to the channels, the attackers started live streaming a deepfake video to the channels’ audience of more than fifteen million subscribers. The fake video featured Elon Musk urging viewers to visit an external website and buy a fake cryptocurrency.

Targeted Attacks and social engineering

So far we’ve looked at deepfakes that try to reach as many people as possible, but deepfake technology has also seen use in attacks that attempt to mislead specific individuals.

In early 2024, a finance worker from a Hong Kong multinational was tricked into paying out $25 million to hackers. The cybercriminals used deepfake technology to assume the identity of the company’s Chief Financial Officer during a call. The fraudsters even went as far as to fake the presence of multiple members of staff during the call using deepfake videos in order to make the situation more believable.

This isn’t the only example of deepfakes being used in targeted social engineering attacks; it’s just the most complex and egregious. There have been dozens of such attacks reported over the past few years, and it looks like they aren’t stopping any time soon.

Are there any good uses of deepfake technology?

Despite the dark side of deepfake technology being a lot more prevalent online, that doesn’t mean that deepfakes can’t also be used for good. Over the years, the same technology used to scam or defame people has also seen a lot of use in entertainment, art, marketing, filmmaking, and in some cases, even in medical research.

So, without further ado, here are some positive uses of deepfakes we’ve seen over the years:

Researchers Kate Glazko and Yiwei Zheng have been using deepfakes to help people suffering from aphantasia, which is the inability to visualize images in your mind.

Hollywood has been using deepfake technology to artificially de-age actors for movies like Indiana Jones, The Avengers, or The Irishman, with varying degrees of success.

Content creators can use voice cloning for their own voice, allowing them to generate text-to-speech voiceovers that sound like them and put out videos faster even when they can’t record.

Deepfakes have also been used in educational contexts to bring historical figures to life and make lessons or museum tours more engaging for audiences.

Lastly, activists and artists have used deepfake technology to create PSA videos warning people about the importance of verifying information and the dangers of online misinformation. The viral 2018 video featuring a deepfake Obama and Jordan Peele is a perfect example of that.

On top of all this, deepfake memes have also been getting more and more popular on social media over the past couple of years, and they range from innocent entertainment (like a video of a young Elvis singing ‘Never Gonna Give You Up’) to satire. However, the vast majority of this content is clearly labeled as AI-generated and doesn’t attempt to mislead anyone into believing that it’s real.

And speaking of labeling, this brings us to the next big question:

Is creating deepfakes illegal?

After learning about all the dangers that deepfakes can pose to individuals, governments, and institutions, it’s only natural to wonder if using this technology is still legal. And the answer, as with most things, is: it depends.

While deepfake technology isn’t illegal per se in most countries, its legality depends on how you use it and the type of content you produce.

Deepfake legality in the United States

As of March 2025, the United States does not have any federal-level laws that regulate deepfakes. Several bills aimed at addressing the use of deepfakes to create non-consensual pornography or interfere with elections have been introduced in Congress, but most of them are still under consideration and have not been passed into law.

On a state level, however, things are different. Multiple states, including Texas, Florida, and California, have passed legislation to prevent using deepfakes for malicious purposes.

Deepfake legality in the United Kingdom

In the UK, current laws regarding online safety have been amended to cover the new threats posed by deepfakes. That means that creating and sharing non-consensual nude deepfakes, for example, is already illegal under current law.

The UK government has also recently announced a proposal that would take these laws even further by criminalizing the creation of sexually explicit deepfakes (even when they aren’t shared) to close a loophole existing in the current law and pivot towards a consent-based approach.

How can you recognize a deepfake?

The first step to recognizing whether or not a video is fake should always be verifying the source. If the video seems implausible, look at the account that posted it to see if it’s an official, trusted source, or just a random user or fan page.

You can also check whether the video has been posted or reported anywhere else. The easiest way to do that is to take a screenshot of it and then use Google’s Reverse Image Search to see if there are any other hits. If multiple reputable sources are reporting on the event, it’s less likely to be fake.
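To give a rough idea of how a screenshot can be matched against other copies of the same image, here is a minimal sketch of an "average hash", one simple perceptual hashing technique. It is not a description of how Google's search works. The tiny 4x4 grayscale grids, the function names, and the `max_distance` threshold are all made up for illustration; a real tool would first shrink and grayscale actual image files.

```python
def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)


def hamming_distance(hash_a, hash_b):
    """Count the positions where two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


def looks_like_same_image(pixels_a, pixels_b, max_distance=2):
    """Treat two images as near-duplicates if their hashes differ in few bits.

    max_distance is an illustrative assumption, not a tuned value.
    """
    distance = hamming_distance(average_hash(pixels_a), average_hash(pixels_b))
    return distance <= max_distance


# Hypothetical 4x4 grayscale thumbnails (values 0-255).
original = [
    [200, 210, 40, 30],
    [205, 220, 35, 25],
    [50, 45, 190, 200],
    [40, 30, 210, 220],
]
# The same picture after a slight brightness change, as a re-upload might cause.
recompressed = [[min(255, value + 6) for value in row] for row in original]
# A picture with a different layout of light and dark areas.
unrelated = [
    [30, 40, 200, 210],
    [25, 35, 220, 205],
    [200, 190, 45, 50],
    [220, 210, 30, 40],
]

print(looks_like_same_image(original, recompressed))
print(looks_like_same_image(original, unrelated))
```

Because the hash only records which areas are lighter or darker than average, small changes such as recompression tend to leave it intact, while a genuinely different picture changes many bits.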

Lastly, there are visual and auditory tells that can indicate that a video is a deepfake, although deepfake technology is improving at such a fast pace that they are getting harder and harder to spot.

Visual hints that a video is fake

- Blurred/soft edges - When the AI tries to superimpose someone into a frame, it often leaves soft edges that look unnatural and out of focus, especially around the face or hands, so look out for them the next time you suspect that a video might be fake.

- Unnatural hair - If the person’s hairline looks different, or if the hair looks like one solid mass without any stray hairs, this might be a sign that the video you’re watching is fake.

- Too much or too little blinking - Early AI models had problems recreating natural blinking. This has mostly been fixed, but you can still find deepfake videos where the subject either doesn’t blink or blinks way too often.

- The wrong number of teeth or fingers - AI tools often have issues giving people the right number of teeth and fingers. If you cannot see individual teeth, or if there are too many of them, that’s a strong sign that the video you’re watching is a deepfake. The same goes for the wrong number of fingers, especially if they are blurry and seem to move unnaturally.

- Smoothed-out facial features - Face-swapping deepfakes often result in overly smooth facial features and blurry skin texture, even when the rest of the person looks crisp and in focus.

- Uneven lighting and asymmetry - If you notice any issues with the lighting, like shadows that don’t make sense, or someone looking shiny even though they’re in the shade, that’s probably the result of a deepfake. The same goes for things like different/uneven earrings.

- Poor video quality - Oftentimes fake videos will be uploaded at low quality in order to obscure any imperfections that result from editing or deepfaking. Ask yourself whether it makes sense for the video you’re watching to be in 480p in an era when even the cheapest smartphones can record crisp HD video.
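Some of these hints can be turned into rough automated checks. As one example, the sketch below estimates a blink rate from a list of per-frame "eye openness" values. Everything here is an assumption for illustration: the openness values are hypothetical numbers that a face-tracking tool might produce (1.0 for fully open, near 0 for closed), and the `closed_below`, `low`, and `high` thresholds are guesses rather than validated figures.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count closed-eye episodes: runs of consecutive frames under the threshold."""
    blinks = 0
    eyes_closed = False
    for value in eye_openness:
        if value < closed_below and not eyes_closed:
            blinks += 1          # a new run of closed frames starts here
            eyes_closed = True
        elif value >= closed_below:
            eyes_closed = False  # eyes reopened, so the next dip is a new blink
    return blinks


def blinks_per_minute(eye_openness, fps, closed_below=0.2):
    """Convert the blink count into a rate, given the clip's frames per second."""
    if fps <= 0 or not eye_openness:
        raise ValueError("need a positive fps and at least one frame")
    duration_minutes = len(eye_openness) / fps / 60
    return count_blinks(eye_openness, closed_below) / duration_minutes


def blink_rate_is_unusual(rate, low=5.0, high=40.0):
    """Flag rates outside an illustrative range (the bounds are assumptions)."""
    return rate < low or rate > high


# Hypothetical one-minute clips sampled at 10 frames per second.
staring_clip = [1.0] * 600      # the subject never blinks
natural_clip = [1.0] * 600
for start in range(20, 600, 40):  # a two-frame blink every four seconds
    natural_clip[start] = natural_clip[start + 1] = 0.1

print(blinks_per_minute(natural_clip, fps=10))
print(blink_rate_is_unusual(blinks_per_minute(staring_clip, fps=10)))
```

A check like this can only raise a flag for closer inspection. As noted above, newer deepfakes often blink naturally, and a real person can go a while without blinking.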

Audio hints that a video might be a deepfake:

- The audio doesn’t match the movement of the lips.

- Uncharacteristic speech patterns, odd pauses, and strange vocal inflections. If the person in the video or audio clip doesn’t sound quite like themselves, that might be a sign that the video is fake. While AI is good at reproducing voices, it still falters a lot when it comes to vocal inflections and timing.

- Strange background noises - AI sometimes has difficulty reproducing natural background sound, so listen for out-of-place noises, especially if they cut in and out seemingly at random.
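The first hint, audio that doesn't match the lips, can be sketched as a simple correlation check. The example below assumes two hypothetical per-frame measurements that some other tool would have to supply: how open the mouth is, and how loud the audio is. It then looks for the best correlation between them across small timing offsets. The names, the `max_lag` window, and the 0.5 threshold are illustrative assumptions, not part of any real detector.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def best_sync_score(mouth_openness, audio_loudness, max_lag=3):
    """Highest correlation found when shifting the tracks by up to max_lag frames.

    A small shift is tolerated because genuine videos can also have a slight
    audio delay.
    """
    n = len(mouth_openness)
    if n != len(audio_loudness):
        raise ValueError("both tracks must cover the same number of frames")
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            mouth, audio = mouth_openness[lag:], audio_loudness[:n - lag]
        else:
            mouth, audio = mouth_openness[:lag], audio_loudness[-lag:]
        best = max(best, pearson(mouth, audio))
    return best


def seems_out_of_sync(score, threshold=0.5):
    """Flag clips whose best score stays under an illustrative threshold."""
    return score < threshold


# Hypothetical per-frame measurements for a short clip.
mouth = [0.1, 0.8, 0.2, 0.9, 0.1, 0.7, 0.2, 0.8, 0.1, 0.9, 0.2, 0.7]
synced_audio = [10 * m for m in mouth]  # loudness rises and falls with the mouth
flat_audio = [0.5] * len(mouth)         # steady sound while the mouth keeps moving

print(seems_out_of_sync(best_sync_score(mouth, synced_audio)))
print(seems_out_of_sync(best_sync_score(mouth, flat_audio)))
```

Real speech is messier than this toy data, so a low score is a reason to look more closely, not proof that a clip is fake.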

Protection against deepfakes

Now that you know what deepfakes are and how to identify them, let’s take a look at what you can do to protect yourself from them.

Deepfake detection software

Unfortunately, with deepfakes being as new as they are, deepfake detection software is still in its infancy. While there are software solutions out there that can analyze videos and determine whether or not they were digitally manipulated, these tools are designed for businesses and are not easily accessible to consumers.

Approach everything with skepticism

One way to prevent yourself from falling for deepfakes would be to implement a personal “zero trust policy”. That doesn’t have to mean doubting everyone in your life, but rather applying a healthy dose of skepticism when consuming information online, especially when dealing with things that are out of the ordinary or sound too good to be true.

Look up the news from that video you found on TikTok before you share it with your friends. See if any reputable news sources have reported on it.

Got a strange call from your boss or a coworker asking you to transfer money or give them access to an account or system? Call them back on their personal number to make sure it was really them. It might feel silly at first, but they’ll be grateful if you end up thwarting an attack.

Make use of social media privacy settings

Unfortunately, there’s no surefire way to protect against someone making a deepfake of you, but there are some things you can do.

The first would be to take advantage of social media platforms' privacy settings and ensure that only your friends and family can see your photo or video posts. This way, if someone were to use the images you share online to create a deepfake, the source of the attack would at least be easier for the authorities to track down.

Do your part and report malicious deepfakes

This is less about personal protection and more about keeping everyone safe. If you come across a malicious deepfake on social media, make sure to report it, especially if it’s a paid ad.

The more people report deepfakes, the quicker they get flagged and taken down. Your report can limit the reach of misinformation and even save people from getting scammed.

Pandora’s box has been opened, but there’s still a glimmer of hope

While deepfake technology definitely has its benefits, there’s no denying that the threats it poses to our democracies, our finances, and even our bodily autonomy are too significant to ignore. Thankfully, the legal frameworks and legislation surrounding AI-generated content seem to be catching up.

The fact that deepfakes have become increasingly widespread on social media over the past few years has also been contributing to this. We can’t “uninvent” deepfake technology, but if more people are aware of its existence and the dangers it poses, we can make it less profitable for attackers to abuse.

Dan is passionate about all things tech. He’s always curious about how things work and enjoys writing in-depth guides to help people on their content creation journey.
